Finches continually evolve and so does science. Follow the conversation to learn how data impacts our world view.

Tuesday, November 8, 2011

BioData at #SC11

I'll kick off the session by sharing stories from Geospiza's work experiences and the work of others. If you have a story to share please bring it. The session will provide an open platform. We plan to cover relational databases, HDF5 technologies, and NoSQL. If you want to join in because you are interested in learning, the abstract below will give you an idea of what will be discussed.

Abstract:

DNA sequencing and related technologies are producing tremendous volumes of data. The raw data from these instruments needs to be reduced through alignment or assembly into forms that can be further processed to yield scientifically or clinically actionable information. The entire data workflow process requires multiple programs and information resources. Standard formats and software tools that meet high performance computing requirements are lacking, but technical approaches are emerging. In this BoF, options such as BAM, BioHDF, VCF and other formats, and corresponding tools, will be reviewed for their utility in meeting a broad set of requirements. The goal of the BoF is to look beyond DNA sequencing and discuss the requirements for data management technologies that can integrate sequence data with data collected from other platforms such as quantitative PCR, mass spectrometry, and imaging systems. We will also explore the technical requirements for working with data from large numbers of samples.

Friday, January 21, 2011

dbSNP, or is it?

The entire collection of human variants - minus 1,617,401 - can be obtained in a single VCF file. VCF stands for Variant Call Format; it is a standard created by the 1000 Genomes project [2] to list and annotate genotypes. The vCard format also uses the “vcf” file extension. Thus, genomic VCF files have cute business card icons on Macs and Windows. Bioinformatics is fun that way.

So, what can we learn? We can use the dbSNPBuildNumber annotation to observe dbSNP’s growth. Cumulative growth shows that the recent builds have contributed the majority of variants. In particular, the 1000 Genomes project’s contributions account for close to 85% of the variants in dbSNP[2]. Examining the data by build number also shows that a build can consist of relatively few variants. There are even 369 variants from a future 133 build.

In dbSNP, variants are described in four ways: SNP, INDEL, MIXED, and MULTI-BASE. The vast majority (82%) are SNPs, with INDELs (INsertions and DELetions) forming a large second class. When MIXED and MULTI-BASE variants are examined, they do not look any different from INDELs, so I am not clear on the purpose of this tag. Perhaps it is there for bioinformatics enjoyment, because you have to find these terms in the 30 million lines; they are not listed in the VCF header. It was also interesting to learn that the longest indels are on the order of 1,000 bases.
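The tallies by build and by variant class described above can be sketched with a few lines of Python. This is only a minimal illustration, not the method used for the post: the INFO key names `dbSNPBuildID` and `VC` are assumptions (check the keys in your own file), and the records are made up.

```python
from collections import Counter


def info_field(info, key):
    """Return the value of `key` in a VCF INFO string, or None if absent."""
    for entry in info.split(";"):
        name, _, value = entry.partition("=")
        if name == key:
            return value
    return None


def tally_info(lines, key):
    """Count variants by the value of one INFO key, skipping header lines."""
    counts = Counter()
    for line in lines:
        if line.startswith("#") or not line.strip():
            continue
        columns = line.rstrip("\n").split("\t")
        counts[info_field(columns[7], key)] += 1  # INFO is the 8th column
    return counts


# Made-up records for illustration; real dbSNP lines carry many more INFO keys.
sample = [
    "##fileformat=VCFv4.0",
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO",
    "1\t100\trs1\tA\tG\t.\t.\tdbSNPBuildID=129;VC=SNP",
    "1\t200\trs2\tAT\tA\t.\t.\tdbSNPBuildID=131;VC=INDEL",
    "2\t300\trs3\tC\tT\t.\t.\tdbSNPBuildID=131;VC=SNP",
]

by_build = tally_info(sample, "dbSNPBuildID")
by_class = tally_info(sample, "VC")
print(by_build)
print(by_class)
```

For a real file, the same function can be fed an open file handle instead of a list, so the 30 million lines are streamed rather than loaded into memory.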

The VCF file contains both reference and variant sequences at each position. The variant sequence can list multiple bases, or sequences, as each position can have multiple alleles. While nearly all variants have a single allele, about 3% have between two and 11 alleles. I assume the variants with the highest numbers of alleles represent complex indel patterns.
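Counting alleles per position is a matter of splitting the ALT column, since VCF separates alternate alleles with commas. The sketch below uses made-up records and is only meant to show the idea.

```python
from collections import Counter


def alt_allele_counts(lines):
    """Map 'number of alternate alleles' to 'number of variant records'."""
    counts = Counter()
    for line in lines:
        if line.startswith("#") or not line.strip():
            continue
        alt = line.split("\t")[4]  # ALT is the 5th column
        counts[len(alt.split(","))] += 1
    return counts


# Made-up records for illustration only.
records = [
    "#CHROM\tPOS\tID\tREF\tALT\tQUAL\tFILTER\tINFO",
    "1\t100\trs1\tA\tG\t.\t.\t.",
    "1\t200\trs2\tAT\tA,ATT,ATTT\t.\t.\t.",
    "2\t300\trs3\tC\tT\t.\t.\t.",
]

allele_counts = alt_allele_counts(records)
print(allele_counts)
```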

Given that the haploid human genome contains approximately 3.1 billion bases, the variants in dbSNP represent nearly 1% of the available positions. Their distribution across the 22 autosomes, defined as the number of variant positions divided by the chromosome length, is fairly constant at around 1%. The X and Y chromosomes and their PARs are, however, much lower, with ratios of 0.45%, 0.12%, and 0.08%, respectively. A likely reason for this observation is that sampling of sex chromosomes in current sequencing projects is low relative to autosomes. The number of mitochondrial variants, as a fraction of genome length, is, on the other hand, much higher at 3.95%.
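The per-chromosome ratio is simply the number of variant positions divided by the chromosome length. A small sketch follows; the chromosome names, counts, and lengths are toy values chosen for illustration, not figures from dbSNP.

```python
def variant_density(variant_counts, lengths):
    """Return variant positions as a percentage of each chromosome's length."""
    return {
        chrom: 100.0 * variant_counts[chrom] / lengths[chrom]
        for chrom in variant_counts
    }


# Toy values (hypothetical chromosomes), not real dbSNP counts or lengths.
toy_counts = {"chrA": 10_000, "chrB": 450}
toy_lengths = {"chrA": 1_000_000, "chrB": 100_000}

density = variant_density(toy_counts, toy_lengths)
for chrom, percent in density.items():
    print(f"{chrom}: {percent:.2f}%")
```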

It is important to point out that the 1% level of variation is simply a count of all currently known positions where variation can occur in individuals. These data are compiled from many individuals. On a per-individual basis, the variation frequency is much lower, with each individual having between three and four million differences when their genome is compared to other people's genomes.

1. Sherry ST, Ward M, & Sirotkin K (1999). dbSNP-database for single nucleotide polymorphisms and other classes of minor genetic variation. Genome research, 9 (8), 677-9 PMID: 10447503

3. PAR - PseudoAutosomal Regions - are located at the ends of the X and Y chromosome. The DNA in these regions can recombine between the sex chromosomes, hence PAR genes demonstrate autosomal inheritance patterns, rather than sex inheritance patterns. You can read more at http://en.wikipedia.org/wiki/Pseudoautosomal_region

Wednesday, January 5, 2011

Databases of databases

Why?

Because we derive knowledge by integrating information in novel ways, hence we need ways to make specialized information repositories interoperate.

Why do we need standards?

Because the current and growing collection of specialized databases is poorly characterized with respect to their mission, categorization, and practical use.

The Nucleic Acids Research (NAR) database of databases illustrates the problem well. For the past 18 years, every Jan 1, NAR has published a database issue in which new databases are described along with others that have been updated. The issues typically contain between 120 and 180 articles representing a fraction of the databases listed by NAR. When one counts the number of databases, explores their organization, and reads the accompanying editorial introductions, several interesting observations can be made.

First, over the years there has been a healthy growth of databases designed to capture many different kinds of specialized information. This year’s NAR database was updated to include 1330 databases, which are organized into 14 categories and 40 subcategories. Some categories, like Metabolic and Signaling Pathways or Organelle Databases, look highly specific, whereas others, such as Plant Databases or Protein Sequence Databases, are general. Subcategories have similar issues. In some cases categories and subcategories make sense. In other cases it is not clear what the intent of categorization is. For example, the category RNA sequence databases lists 73 databases that appear to be mostly rRNA and small RNA databases. Within the list are a couple of RNA virus databases like the HIV Sequence Database and Subviral RNA Database. These are also listed under the subcategory Viral Genome Databases in the Genomics Databases (non-vertebrates) category, in an attempt to cross-reference the database under a different category. OK, I get that. HIV is in RNA sequences because it is an RNA virus. But what about the hepatitis, influenza, and other RNA viruses listed under Viral genome databases? Why aren’t they in RNA sequences? While I’m picking on RNA sequences, how come all of the splicing databases are listed in a subcategory in Nucleotide Sequence Databases? Why isn’t RNA Sequences a subcategory under Nucleotide Sequence Databases? Isn’t RNA composed of nucleotides? It makes one wonder how the databases are categorized.

Categorizing databases so that they are easy to find and their utility can be quickly grokked is clearly challenging. This issue becomes more profound when the level of database redundancy, determined from the databases’ names, is considered. This analysis is, of course, limited to names that can be understood. The Hollywood Database, for example, does not store Julia Roberts' DNA; rather, it is an exon annotation database. Fortunately, many databases are not named so cleverly. Going back to our RNA Sequence category, we can find many ribosomal sequence databases, several tRNA databases, and general RNA sequence databases. There is even a cluster of eight microRNA databases all starting with an “mi” or “miR” prefix. There are enough rice (18) and Arabidopsis (28) databases that they get their own subcategories. Without too much effort one can see there are many competing resources, yet choosing the ones best suited for your needs would require a substantial investment of time to understand how these databases overlap, where they are unique, and, in many cases, to navigate idiosyncratic interfaces and file formats to retrieve and utilize their information. When maintenance and overall information validity are factored in, the challenge compounds.

How do things get this way?

Evolution. Software systems, like biological systems, change over time. Databases, like organisms, evolve from common ancestors. In this way, new species are formed. Selective pressures enhance useful features, increase their redundancy, and cause extinctions. We can see these patterns in play for biological databases by examining the tables of contents and introductory editorials for the past 16 years of the NAR database issue. Interestingly, in the past six or seven years, the issue’s editor has made a point of recording the issue’s anniversary, making 2011 the 18th year of this issue. Yet easily accessible data can be obtained only back to 1996, 16 years ago. History is hard and incomplete, just like any evolutionary record.

We cannot discuss database diversity without trying to count the number of species. This is hard too. NAR is an easily accessible site with a list that can be counted. However, one of the databases in the 1999 and 2000 NAR database issues was DBcat, a database of databases. At that time it tracked more than 400 and 500 databases, while NAR tracked 103 and 227 databases, respectively, a fairly large discrepancy. DBcat eventually went extinct due to lack of funding, a very significant selective pressure. Speaking of selective pressure, it would be interesting to understand how databases are chosen to be included in NAR’s list. Articles are submitted, and presumably reviewed, so there is self-selection and some peer review. The total number of new databases since 1996 is 1,308, which is close to 1,330, so the current list is likely an accumulation of database entries submitted over the years. From the editorial comments, the selection process is getting more selective, as statements have changed from databases that might be useful (2007) to "carefully selected" (2010, 2011). Back in 2006, 2007, and 2008 there was even mention of databases being dropped due to obsolescence.

Where do we go from here?

From the current data, one would expect that 2011 will see between 60 and 120 new databases appear. Groups like BioDBcore and other committees are trying to encourage standardization with respect to a database’s metadata (name, URL, contacts, when established, data stored, standards used, and much more). This may be helpful, but when I read the list of proposed standards, I am less optimistic because the standards do not address the hard issues. Why, for example, do we need 18 different rice databases or eight “mi*” databases? For that matter, why do we have a journal called Database? NAR does a better job listing databases than Database, and being a journal about databases, wouldn't it be a good idea if Database tracked databases? And critical information, like usage, last updated, citations, and user comments, which would help one more easily evaluate whether to invest time investigating the resource, is missing.

Perhaps we should think about better ways to communicate a database’s value to the community it serves, and in the case where several databases do similar things, standards committees should discuss how to bring the individual databases together to share their common features and accentuate their unique qualities to support research endeavors. After all, no amount of annotation is useful if I still have too many choices to sort through.

Tuesday, April 13, 2010

Bloginar: Standardizing Bioinformatics with BioHDF (HDF5)

The following map guides the presentation. The poster has a title and four main sections, which cover background information, specific aspects of the general Next Generation Sequencing (NGS) workflow, and HDF5’s advantages for working with large amounts of NGS data.

Section 1. The first section introduces HDF5 (Hierarchical Data Format) as a software platform for working with scientific data. The introduction begins with the abstract and lists five specific challenges created by NGS: 1) high end computing infrastructures are needed to work with NGS data, 2) NGS data analysis involves complex multi-step processes that, 3) compare NGS data to multiple reference sequence databases, 4) the resulting datasets of alignments must be visualized in multiple ways, and 5) scientific knowledge is gained when many datasets are compared.

Next, choices for managing NGS data are compared in a four-category table. These include text and binary formats. While text formats (delimited and XML) have been popular for bioinformatics, they do not scale well, and binary formats are gaining in popularity. The current bioinformatics binary formats are listed (bottom left) along with a description of their limitations.

The introduction closes with a description of HDF5 and its advantages for supporting NGS data management and analysis. Specifically, HDF5 is a platform for managing scientific data. Such data are typically complex and consist of images, large multi-dimensional arrays, and metadata. HDF5 has been used for over 20 years in other data-intensive fields; it is robust, portable, and tuned for high performance computing. Thus HDF5 is well suited for NGS. Indeed, groups ranging from academic researchers to NGS instrument vendors and software companies are recognizing the value of HDF5.

Section 2. This section illustrates how HDF5 facilitates primary data analysis. First we are reminded that NGS data are analyzed in three phases: primary analysis, secondary analysis, and tertiary analysis. Primary analysis is the step that converts images to reads consisting of basecalls (or colors, or flowgrams) and quality values. In secondary analysis, reads are aligned to reference data (mapped) or amongst themselves (assembled). In many NGS assays, secondary analysis produces tables of alignments that must be compared to one another, in tertiary analysis, to gain scientific insights.

The remaining portion of section 2 shows how Illumina GA and SOLiD primary data (reads and quality values) can be stored in BioHDF and later reviewed using the BioHDF tools and scripts. The resulting quality graphs are organized into three groups (left to right) to show base composition plots, quality value (QV) distribution graphs, and other summaries.

Base composition plots show the count of each base (or color) that occurs at a given position in the read. These plots are used to assess overall randomness of a library and observe systematic nucleotide incorporation errors or biases.
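The counts behind a base composition plot can be sketched in plain Python. This is an illustration of the idea only, not the BioHDF tools themselves, and the reads are made up.

```python
from collections import Counter


def base_composition(reads):
    """Return one Counter per read position, counting the bases seen there."""
    longest = max((len(read) for read in reads), default=0)
    composition = [Counter() for _ in range(longest)]
    for read in reads:
        for position, base in enumerate(read):
            composition[position][base] += 1
    return composition


# Made-up reads; the last one is shorter, so position 3 has fewer counts.
reads = ["ACGT", "ACGA", "TCGT", "ACG"]
composition = base_composition(reads)
for position, counts in enumerate(composition):
    print(position, dict(counts))
```

A strong skew at one position, such as every read showing the same base, is the kind of systematic bias these plots are meant to expose.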

Quality value plots show the distribution of QVs at each base position within the ensemble of reads. As each NGS run produces many millions of reads, it is worthwhile summarizing QVs in multiple ways. The first plots, from the top, show the average QV per base with error bars indicating QVs that are within one standard deviation of the mean. Next, box and whisker plots show the overall quality distribution (median, lower and upper quartile, minimum and maximum values) at each position. These plots are followed by “error” plots which show the total count of QVs below certain thresholds (red, QV < 10; green QV < 20; blue, QV < 30). The final two sets of plots show the number of QVs at each position for all observed values and the number of bases having each quality value.
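The per-position summaries described above (mean with standard deviation, box-and-whisker statistics, and counts below thresholds) can be sketched with Python's standard `statistics` module. This is a minimal illustration with made-up quality values; it assumes all reads have the same length, and the choice of population standard deviation and the "inclusive" quartile method are my assumptions, not necessarily what the BioHDF scripts do.

```python
import statistics


def qv_summary(quality_rows, thresholds=(10, 20, 30)):
    """Summarize quality values position by position across equal-length reads."""
    summaries = []
    for column in zip(*quality_rows):  # one column per base position
        q1, median, q3 = statistics.quantiles(column, n=4, method="inclusive")
        summaries.append({
            "mean": statistics.mean(column),
            "stdev": statistics.pstdev(column),  # population standard deviation
            "min": min(column),
            "q1": q1,
            "median": median,
            "q3": q3,
            "max": max(column),
            # Count of QVs strictly below each threshold (the "error" plot).
            "below": {t: sum(1 for qv in column if qv < t) for t in thresholds},
        })
    return summaries


# Made-up quality values: four reads, two positions each.
quality_rows = [[30, 35], [20, 25], [10, 15], [40, 5]]
summaries = qv_summary(quality_rows)
for position, summary in enumerate(summaries):
    print(position, summary)
```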

The final group of plots shows overall dataset complexity, GC content (base space only), average QV/read, and %GC vs average QV (base space only). Dataset complexity is computed by determining the number of times a given read exactly matches other reads in the dataset. In some experiments, too many identical reads indicate a problem like PCR bias. In other cases, like tag profiling, many identical reads are expected from highly expressed genes. Errors in the data can artificially increase complexity.
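Dataset complexity and GC content are both easy to sketch. The example below counts exact duplicate reads and computes %GC per read; the reads are made up, and this is an illustration rather than the BioHDF implementation.

```python
from collections import Counter


def duplicate_profile(reads):
    """Map 'number of exact copies' to 'how many distinct sequences have that many'."""
    copies = Counter(reads)
    return Counter(copies.values())


def gc_percent(read):
    """Return the percentage of G and C bases in a read (0.0 for an empty read)."""
    if not read:
        return 0.0
    gc = sum(1 for base in read.upper() if base in "GC")
    return 100.0 * gc / len(read)


# Made-up reads: one sequence appears three times, two appear once.
reads = ["ACGT", "ACGT", "ACGT", "GGCC", "TTAA"]
profile = duplicate_profile(reads)
print(profile)
print([gc_percent(read) for read in reads])
```

A single sequencing error turns an exact duplicate into a "new" distinct read, which is how errors can inflate apparent complexity.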

Section 3. Primary data analysis gives us a picture of how well the samples were prepared or how well the instrument ran, with some indication about sample quality. Secondary and tertiary analysis tell us about sample quality and, more importantly, provide biological insights. The third section focuses on secondary and tertiary analysis and begins with a brief cartoon showing a high-level data analysis workflow using BioHDF to store primary data, alignment results, and annotations. BioHDF tools are used to query these data, and other software within GeneSifter is used to compare data between samples and display the data in interactive reports to examine the details from single or multiple samples.

The left side of this section illustrates what is possible with single samples. Beginning with a simple table that indicates how many reads align to each reference sequence, we can drill into multiple reports that provide increasing detail about the alignments. For example, the gene list report (second from top) uses gene model annotations to summarize the alignments for all genes identified in the dataset. Each gene is displayed as a thumbnail graphic that can be clicked to see greater detail, which is shown in the third plot. The Integrated Gene View not only shows the density of reads across the gene's genomic region, but also shows evidence of splice junctions, identified single base differences (SNVs), and small insertions and deletions (indels). Navigation controls provide ways to zoom into and out of the current view of data, and move to new locations. Additionally, when possible, the read density plot is accompanied by an Entrez gene model and dbSNP data so that data can be observed in a context of known information. Tables that describe the observed variants follow. Clicking on a variant drills into the alignment viewer to show the reads encompassing the point of variation.

The right side illustrates multi-sample analysis in GeneSifter. In assays like RNA-Seq, alignment tables are converted to gene expression values that can be compared between samples. Volcano (top) and other plots are used to visualize the differences between the datasets. Since each point in the volcano plot represents the difference in expression for a gene between two samples (or conditions), we can click on that point to view the expression details for that gene (middle) in the different samples. In the case of RNA-Seq, we can also obtain expression values for the individual exons within the gene, making it possible to observe differential exon levels in conjunction with overall gene expression levels (middle). Clicking the appropriate link in the exon expression bar graph takes us to the alignment details for the samples being analyzed (bottom); in this example we have two cases and two control replicates. Like the single sample Integrated Gene Views, annotations are displayed with alignment data. When navigation buttons are clicked, all of the displayed genes move together so that you can explore the gene's details and surrounding neighborhood for multiple samples in a comparative fashion.
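For readers unfamiliar with volcano plots, each point is conventionally placed by its log2 fold change (x) and the negative log10 of its p-value (y). The sketch below shows that arithmetic only; it is not GeneSifter's code, the pseudocount is an assumption added to avoid dividing by zero, and the expression values and p-value are made up (the p-value would come from whatever statistical test is used).

```python
import math


def volcano_point(mean_a, mean_b, p_value, pseudocount=1.0):
    """Return (log2 fold change of B over A, -log10 p-value) for one gene."""
    log2_fc = math.log2((mean_b + pseudocount) / (mean_a + pseudocount))
    return log2_fc, -math.log10(p_value)


# Made-up gene: expression 9 in condition A, 39 in condition B, p = 0.001.
x, y = volcano_point(9, 39, 0.001)
print(f"log2 fold change = {x:.2f}, -log10(p) = {y:.2f}")
```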

Finally, the poster is summarized with a number of take home points. These reiterate the fact that NGS is driving the need to use binary file formats to manage NGS and analysis results and that HDF5 provides an attractive solution because of its long history and development efforts that specifically target scientific programming requirements. In our hands, HDF5 has helped make GeneSifter a highly scalable and interactive web-application with less development effort than would have been needed to implement other technologies.

If you are a software developer and are interested in BioHDF, please visit www.biohdf.org. If you do not want to program and instead want a way to easily analyze your NGS data to make new discoveries, please contact us.

Wednesday, February 17, 2010

Standardizing the Next Generation of Bioinformatics Software Development With BioHDF (HDF5)

Abstract

- An overview of NGS data analysis and workflows

- A prototype data model for working with NGS data

- Practical examples of data analysis and viewing information using the underlying framework

- Performance benchmarks comparing HDF5 to other file formats

Monday, January 18, 2010

Systems Biology with HDF5

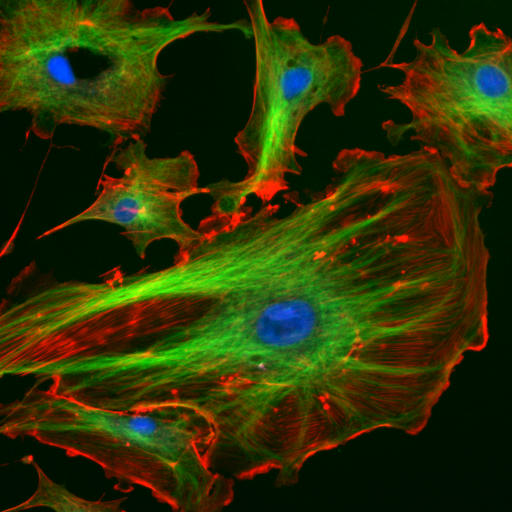

The Association for Computing Machinery (ACM) recently published an article, "Unifying Biological Image Formats with HDF5," that argues for using HDF5 and HDF tools as a common framework for working with image files. This article is worth reading for several reasons.

First, it provides a nice introduction and background to HDF5, its origins, and movement towards becoming an ISO standard. HDF5's technical features are also included in this discussion.

Next, a brief history of the imaging community is covered to share how X-ray crystallographers, electron, and optical microscopists had all independently considered HDF5 as a framework for their next-generation image file formats. Through this discussion, the challenges that have been identified within the imaging community are listed.

Like genomics, the amounts of data being collected are ever increasing, current formats are inflexible and difficult to adapt to future modalities and dimensionality, and the nonarchival quality of data undermines long-term value. That is, current data typically lack sufficient metadata about their origins and experiments to be useful in the long-term.

The article goes on to make the point that current challenges with image data could be addressed if the community adopts an existing format that can support both generic and specialized data formats and meet a set of common requirements related to performance, interoperability, and archiving. Examples of how HDF5 meets these requirements are included. Briefly, HDF5's data caching can be used to overcome computation bottlenecks related to the fact that image sizes are exceeding RAM capacity. Interoperability issues can be addressed through HDF5's ability to store multiple metadata schemas in flexible ways. And, because HDF5 is self describing, data stored in HDF5 can be better preserved.

Finally, a barrier to moving to a new technology is supporting legacy applications that may be costly to replace. Thus, the article closes with a creative proposal for supporting legacy software applications and recommendations for future development. HDF5 files could support legacy software applications if they were able to present the data, stored within the HDF5 file, as the collection of directories and files required by the legacy application. This could be accomplished by developing an abstraction layer that could interact with FUSE (Filesystem in User Space) and essentially mount the HDF5 file as a virtual file system. Such a scenario is only possible because data are stored in HDF5 in a general way that can be further abstracted and presented in multiple specific ways.

While this article focused on issues related to image formats, there are many parallels that the genomics and Next Generation Sequencing communities should pay attention to, and if you are a bioinformatics software developer or running bioinformatics projects, you should put this paper on your must read list.

Sunday, November 8, 2009

Expeditiously Exponential: Data Sharing and Standardization

We can all agree that our ability to produce genomics and other kinds of data is increasing at exponential rates. Less clear are the consequences for how these data will be shared and ultimately used. These topics were explored in last month's (Oct. 9, 2009) policy forum feature in the journal Science.

The first article, listed under the category "megascience," dealt with issues about sharing 'omics data. The challenge is that systems biology research demands that data from many kinds of instrument platforms (DNA sequencing, mass spectrometry, flow cytometry, microscopy, and others) be combined in different ways to produce a complete picture of a biological system. Today, each platform generates its own kind of "big" data that, to be useful, must be computationally processed and transformed into standard outputs. Moreover, the data are often collected by different research groups focused on particular aspects of a common problem. Hence, the full utility of the data being produced can only be realized when the data are made open and shared throughout the scientific community. The article listed past efforts in developing sharing policies, and the central table included 12 data sharing policies that are already in effect.

Sharing data solves half of the problem; the other half is being able to use the data once shared. This requires that data be structured and annotated in ways that make it understandable by a wide range of research groups. Such standards typically include minimum information checklists that define specific annotations and which data should be kept from different platforms. The data and metadata are stored in structured documents that reflect a community's view about what is important to know with respect to how data were collected and the samples the data were collected from. The problem is that annotation standards are developed by diverse groups and, like the data, are expanding. This expansion creates new challenges with making data interoperable, the very problem standards try to address.

The article closed with high-level recommendations for enforcing policy through funding and publication requirements and acknowledged that full compliance requires that general concerns with pre-publication data use and patient information be addressed. More importantly, the article acknowledged that meeting data sharing and formatting standards has economic implications. That is, researchers need time-efficient data management systems and the right kinds of tools and informatics expertise to meet standards. We also need to develop the right kind of global infrastructure to support data sharing.

Fortunately, complying with data standards is an area where Geospiza can help. First, our software systems rely on open, scientifically valid tools and technologies. In DNA sequencing we support community-developed alignment algorithms. The statistical analysis tools in GeneSifter Analysis Edition utilize R and BioConductor to compare gene expression data from both microarrays and DNA sequencing. Further, we participate in the community by contributing additional open-source tools and standards through efforts like the BioHDF project. Second, the GeneSifter Analysis and Laboratory platforms provide the time-efficient data management solutions needed to move data through its complete life cycle from collection, to intermediate analysis, to publishing files in standard formats. GeneSifter lowers researchers' economic barriers to meeting data sharing and annotation standards, keeping the focus on doing good science with the data.

Tuesday, October 13, 2009

Super Computing 09 and BioHDF

Developing Bioinformatics Applications with BioHDF

In this session we will present how HDF5 can be used to work with large volumes of DNA sequence data. We will cover the current state of bioinformatics tools that utilize HDF5 and proposed extensions to the HDF5 library to create BioHDF. The session will include a discussion of the requirements being considered in developing data models for working with DNA sequence alignments to measure variation within sets of DNA sequences.

HDF5 is an open-source technology suite for managing diverse, complex, high-volume data in heterogeneous computing and storage environments. The BioHDF project is investigating the use of HDF5 for working with very large scientific datasets. HDF5 provides a hierarchical data model, binary file format, and collection of APIs supporting data access. BioHDF will extend HDF5 to support DNA sequencing requirements.

Initial prototyping of BioHDF has demonstrated clear benefits. Data can be compressed and indexed in BioHDF to reduce storage needs and enable very rapid (typically a few milliseconds) random access into these sequence and alignment datasets, essentially independent of the overall HDF5 file size. Through additional prototyping activities, we have identified key architectural elements and tools that will form BioHDF.
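To make the idea of compressed, indexed storage concrete, here is a minimal sketch in plain Python. It is not the HDF5 or BioHDF API; the function names, the chunk size of four records, and the randomly generated 36-base reads are all assumptions made for illustration. The point is only that, with a chunk index, fetching one record means decompressing one small chunk, so the cost does not grow with the total amount of data stored.

```python
import random
import zlib


def pack(records, chunk_size=4, level=6):
    """Compress records in fixed-size chunks and build a chunk offset index."""
    blob = bytearray()
    index = []  # one (byte offset, compressed length) entry per chunk
    for start in range(0, len(records), chunk_size):
        chunk = "\n".join(records[start:start + chunk_size]).encode("ascii")
        packed = zlib.compress(chunk, level)
        index.append((len(blob), len(packed)))
        blob.extend(packed)
    return bytes(blob), index


def fetch(blob, index, i, chunk_size=4):
    """Return record i by decompressing only the chunk that holds it."""
    offset, length = index[i // chunk_size]
    chunk = zlib.decompress(blob[offset:offset + length]).decode("ascii")
    return chunk.split("\n")[i % chunk_size]


# Hypothetical data: 1,000 random 36-base reads (seeded for repeatability).
rng = random.Random(0)
reads = ["".join(rng.choice("ACGT") for _ in range(36)) for _ in range(1000)]

blob, index = pack(reads)
print(fetch(blob, index, 731) == reads[731])
```

Larger chunks usually compress better but make each lookup decompress more data, which is the same trade-off one tunes when choosing chunk sizes in a real chunked file format.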

The BoF session will include a presentation of the current state of BioHDF and proposed implementations to encourage discussion of future directions.

Sunday, September 6, 2009

Open or Closed

Open scientific technologies include open-source and published academic algorithms, programs, databases, and core infrastructure software such as operating systems, web servers, and other components needed to build modern systems for data management. Unlike approaches that rely on proprietary software, Geospiza’s adoption of open platforms and participation in the open-source community benefits our customers in numerous ways.

Geospiza’s Open Source History

When Geospiza began in 1997, the company started building software systems to support DNA sequencing technologies and applications. Our first products focused on web-enabled data management for DNA sequencing-based genomics applications. Foundational infrastructure, such as the web server and application layer, incorporated Apache and Perl. We were also leaders in that our first systems operated on Linux, an open-source UNIX-like operating system. In those early days, however, we used proprietary databases such as Solid and Oracle because the open-source alternatives Postgres and MySQL were still lacking features needed to support robust data processing environments. As these products matured, we extended our application support to include Postgres to deliver cost-effective solutions for our customers. By adopting such open platforms we were able to deliver robust, high-performing systems rapidly and at a reasonable cost.

In addition to using open-source technology as the foundation of our infrastructure, we also worked with open tools to deliver our scientific applications. Our first product, the Finch Blast-Server, utilized the public domain BLAST from NCBI. Where possible, we sought to include well-adopted tools for other applications such as base calling, sequence assembly, and repeat masking, for which the source code was made available. We favored these kinds of tools over developing our own proprietary tools, because it was clear that technologies emerging from communities like the genome centers would advance much more quickly and be better tuned to the problems people were trying to address. Further, these tools, because of their wide adoption within their community and publication, received higher levels of scrutiny and validation than their proprietary counterparts.

Times Change

In the early days, many of the genome center tools were licensed by universities. As the bioinformatics field matured, open-source models for delivering bioinformatics software have become more popular. Led by NCBI and pioneered by organizations like TIGR (now JCVI) and the Sanger Institute, the majority of useful bioinformatics programs are now being delivered as open source under GPL, BSD-like, or Perl Artistic-style licenses (www.opensource.org). The authors of these programs have benefited from wider adoption of their programs and continued support from funding agencies like NIH. In some cases other groups are extending best-of-breed technologies into new applications.

A significant benefit of the increasing use of open-source licensing is that a large number of analytical tools are readily available for many kinds of applications. Today we have robust statistical platforms like R and BioConductor and several algorithms for aligning Next Gen Sequencing (NGS) data. Because these platforms and tools are truly open-source, bioinformatics groups can easily access these technologies to understand how they work and compare other approaches to their own. This creates a competitive environment for bioinformatics tool providers that drives improvements in algorithm performance and accuracy and the research community benefits greatly.

Design Choices

Early on, Geospiza recognized the value of incorporating tools from the academic research community into our user-friendly software systems. Such tools were being developed in close collaboration with the data production centers that were trying to solve scientific problems associated with DNA sequence assembly and analysis. Companies developing proprietary tools designed to compete with these efforts were at a disadvantage, because they did not have real-time access to the conversations between biologists, lab specialists, and mathematicians needed to quickly develop deep experience in working with biologically complex data. This disadvantage continues today. Further, the closed nature of proprietary software limits the ability to publish work and have critical peer review of the code needed to ensure scientific validation.

Our work could proceed more quickly because we did not have to invest in solving the research problems associated with developing algorithms. Moreover, we did not have to invest in proving the scientific credibility of an algorithm. Instead we could cite published references and keep our focus on solving problems associated with delivering the user interfaces needed to work with the data. Our customers benefited by gaining easy access to best-of-breed tools and by knowing that they had a community to draw on to understand the tools' scientific basis.

Geospiza continues its practice of adopting open best-of-breed technologies. Our NGS systems utilize multiple tools such as MAQ, BWA, Bowtie, MapReads and others. GeneSifter Analysis Edition utilizes routines from the R and BioConductor packages to perform statistical computations to compare datasets from microarray and NGS experiments. In addition, we are addressing issues related to high performance computing through our collaboration with the HDF Group and the BioHDF project. In this case we are not only adopting open-source technology, but also working with leaders in the field to make open-source contributions of our own.

When you use Geospiza’s GeneSifter products you can be assured that you are using the same tools as the leaders in our fields to receive the benefits of reducing data analysis costs combined with the advantages of community support through forums and peer reviewed literature.

Sunday, August 16, 2009

BioHDF on the Web

Presentations:

Presentations by Mark Welsh and me can be found at SciVee.

Mark presents our poster at ISMB, and I present our work at the “Sequencing, Finishing and Analysis in the Future Meeting,” in Santa Fe.

We also presented at this and last year’s BOSC meetings that were held at ISMB. The abstracts and slides can be found at:

What others are thinking:

Real time commentary on the 2009 BOSC presentation can be found at friendfeed and another post. The Fisheye Perspective considers how HDF5 fits with semantic web tools.

HDF in Bioinformatics:

Check out Fast5 for using HDF5 to store sequences and base probabilities.

BioHDF in the News:

Genome Web and Bioinform articles on HDF5 or referencing HDF5 include:

- NHGRI Awards Grant to Geospiza-HDF Collaboration

- Geospiza, HDF Group Snag $1.1M NHGRI Grant to Develop Scalable Data Format for Second-gen Sequencing

- Bioinformatics Pros: Labs Should Include Data Management in All Project Plans

FinchTalk:

Links to FinchTalks about BioHDF from 2008 to present include:

Bloginar: Scalable Bioinformatics Infrastructures with BioHDF.

- Part I: Introduction

- Part II: Background

- Part III: The HDF5 Advantage

- Part IV: HDF5 Benefits

- Part V: Why HDF5?

You can learn more about HDF5 and get the software and tools at:

Sunday, July 12, 2009

Bloginar: Scalable Bioinformatics Infrastructures with BioHDF. Part V: Why HDF5?

For previous posts, see:

- The introduction

- Project background

- Challenges of working with NGS data

- HDF5 benefits for working with NGS data

Why HDF5?

As previously discussed, HDF technology is designed for working with large amounts of complex data that naturally organize into multidimensional arrays. These data are composed of discrete numeric values, strings of characters, images, documents, and other kinds of data that must be compared in different ways to extract scientific information and meaning. Software applications that work with such data must meet a variety of organization, computation, and performance requirements to support the communities of researchers where they are used.

When software developers build applications for the scientific community, they must decide between creating new file formats and software tools for working with the data, or adapting existing solutions that already meet a general set of requirements. The advantage of developing software that's specific to an application domain is that highly optimized systems can be created. However, this advantage can disappear when significant amounts of development time are needed to deal with the "low-level" functions of structuring files, indexing data, tracking bits and bytes, making the system portable across different computer architectures, and creating a basic set of tools to work with the data. Moreover, such a system would be unique, with only a small set of users and developers able to understand and share knowledge concerning its use.

The alternative to building a highly optimized domain-specific application system is to find and adapt existing technologies, with a preference for those that are widely used. Such systems benefit from the insights and perspective of many users and will often have features in place before one even realizes they are needed. If a technology has widespread adoption, there will likely be a support group and knowledge base to learn from. Finally, it is best to choose a solution that has been tested by time. Longevity is a good measure of the robustness of the various parts and tools in the system.

HDF: 20 Years in Physical Sciences

Our requirements for a high-performance data management and computation system are these:

- Different kinds of data need to be stored and accessed.

- The system must be able to organize data in different ways.

- Data will be stored in different combinations.

- Visualization and computational tools will access data quickly and randomly.

- Data storage must be scalable, efficient, and portable across computer platforms.

- The data model must be self describing and accessible to software tools.

- Software used to work with the data must be robust, and widely used.

HDF5 is a natural fit. The file format and software libraries are used in some of the largest data management projects known to date. Because of its strengths, HDF5 is independently finding its way into other bioinformatics applications and is a good choice for developing software to support NGS.

HDF5 software provides a common infrastructure that allows different scientific communities to build specific tools and applications. Applications using HDF5 typically contain three parts: one or more HDF5 files to store data, a library of software routines to access the data, and the tools, applications and additional libraries to carry out functions that are specific to a particular domain. To implement an HDF5-based application, a data model must be developed along with application-specific tools such as user interfaces and unique visualizations. While implementation can be a lot of work in its own right, the tools to implement the model and provide scalable, high-performance programmatic access to the data have already been developed, debugged, and delivered through the HDF I/O (input/output) library.

In earlier posts, we presented examples where we needed to write software to parse FASTA-formatted sequence files and output files from alignment programs. These parsers then called routines in the HDF I/O library to add data to the HDF5 file. During the import phase, we could set different compression levels and define the chunk size to compress our data and optimize access times. In these cases, we developed a simple data model based on the alignment output from programs like BWA, Bowtie, and MapReads. Most importantly, we were able to work with NGS data from multiple platforms efficiently, with software that required weeks of development rather than the months and years that would be needed if the system were built from scratch.
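The import step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: it uses the standard zlib module as a stand-in for the HDF I/O library, and the function names, the sample FASTA text, and the particular chunk size and compression level are all hypothetical rather than taken from our importers.

```python
import io
import zlib


def parse_fasta(handle):
    """Yield (name, sequence) pairs from FASTA-formatted text."""
    name, parts = None, []
    for line in handle:
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if name is not None:
                yield name, "".join(parts)
            header = line[1:].split()
            name, parts = (header[0] if header else ""), []
        else:
            parts.append(line)
    if name is not None:
        yield name, "".join(parts)


def import_reads(records, chunk_size, level):
    """Compress sequences in chunks of chunk_size records at the given zlib level."""
    seqs = [seq for _, seq in records]
    return [
        zlib.compress("\n".join(seqs[i:i + chunk_size]).encode("ascii"), level)
        for i in range(0, len(seqs), chunk_size)
    ]


# Hypothetical input: two reads, the first wrapped over two lines.
fasta = io.StringIO(">read1 sample A\nACGTACGT\nTTGA\n>read2\nGGCC\n")
records = list(parse_fasta(fasta))
chunks = import_reads(records, chunk_size=1, level=9)
print(records, len(chunks))
```

The two parameters to import_reads mirror the two knobs mentioned in the text: the compression level trades import time for space, and the chunk size trades compression ratio for how much data must be read to reach a single record.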

While HDF5 technology is powerful "out-of-the-box," a number of features can still be added to make it better for bioinformatics applications. The BioHDF project is about making such domain-specific extensions. These are expected to include modifications to the general file format to better support variable data like DNA sequences. I/O library extensions will be created to help HDF5 "speak" bioinformatics by creating APIs (Application Programming Interfaces) that understand our data. Finally, sets of command line programs and other tools will be created to help bioinformatics groups get started quickly with using the technology.

To summarize, the HDF5 platform is well-suited for supporting NGS data management and analysis applications. Using this technology, groups will be able to make their data more portable for sharing because the data model and data storage are separated from the implementation of the model in the application system. HDF5's flexibility in the kinds of data it can store makes it easier to integrate data from a wide variety of sources. Integrated compression utilities and data chunking help HDF5-based systems scale. Finally, because the HDF5 I/O library is extensive and robust, and the HDF5 tool kit includes basic command-line and GUI tools, the platform allows for rapid prototyping and reduced development time, making it easier to create new approaches for NGS data management and analysis.

For more information, or if you are interested in collaborating on the BioHDF project, please feel free to contact me (todd at geospiza.com).

Sunday, June 7, 2009

Bloginar: Scalable Bioinformatics Infrastructures with BioHDF. Part I: Introduction

Over the next few posts I will share the slides from the presentation. This post begins with the abstract.

Abstract

“If the data problem is not addressed, ABI’s SOLiD, 454’s GS FLX, Illumina’s GAII or any of the other deep sequencing platforms will be destined to sit in their air-conditioned rooms like a Stradivarius without a bow” was the closing statement in the lead Nature Biotechnology editorial “Prepare for the deluge” (Oct. 2008). The oft-stated challenges focus on the obvious problems of storing and analyzing data. However, the problems are much deeper than the short descriptions portray. True, researchers are ill-prepared to confront the challenges of inadequate IT infrastructures, but there is a greater challenge in that there is a lack of easy-to-use, well-performing software systems and interfaces that would allow researchers to work with data in multiple ways to summarize information and drill down into supporting details.

Meeting the above challenge requires that we have well performing software frameworks and underlying data management tools to store and organize data in better ways than complex mixtures of flat files and relational databases. Geospiza and The HDF Group are collaborating to develop open-source, portable, scalable, bioinformatics technologies based on HDF5 (Hierarchical Data Format – http://www.hdfgroup.org). We call these extensible domain-specific data technologies “BioHDF.” BioHDF will implement a data model that supports primary DNA sequence information (reads, quality values, meta data) and the results from sequence alignment and variation detection algorithms. BioHDF will extend HDF5 data structures and library routines with new features (indexes, additional compression, graph layouts) to support the high performance data storage and computation requirements of Next Gen Sequencing.

For close to 20 years, HDF data formats and software infrastructure have been used to manage and access high volume complex data in hundreds of applications, from flight testing to global climate research. The BioHDF effort is leveraging these strengths. We will show data from small RNA and gene expression analyses that demonstrate HDF5’s value for reducing the space, time, bandwidth, and development costs associated with working with Next Gen Sequence data.

The next posts will cover:

- Why NGS is exciting and challenges that can be overcome with HDF5

- What the BioHDF project is and some examples of what we are doing with HDF5

- Some background on HDF5 (Hierarchical Data Format)

Sunday, February 15, 2009

Three Themes from ABRF and AGBT Part I: The Laboratory Challenge

- The Laboratory: Running successful experiments requires careful attention to detail.

- Bioinformatics: Every presentation called out bioinformatics as a major bottleneck. The data are hard to work with and different NGS experiments require different specialized bioinformatics workflows (pipelines).

- Information Technology (IT): The bioinformatics bottleneck is exacerbated by IT issues involving data storage, computation, and data transfer bandwidth.

We kicked off ABRF by participating in the Next Gen DNA Sequencing workshop on Saturday (Feb. 7). It was extremely well attended with presentations on experiences in setting up labs for Next Gen sequencing, preparing DNA libraries for sequencing, and dealing with the IT and bioinformatics.

I had the opportunity to provide the “overview” talk. In that presentation “From Reads to Datasets, Why Next Gen is not Sanger Sequencing,” I focused on the kinds of things you can do with NGS technology, its power, and the high level issues that groups are facing today when implementing these systems. I also introduced one of our research projects on developing scalable infrastructures using HDF5 for Next Gen bioinformatics and high-performing, dynamic software interfaces. Three themes resurfaced again and again throughout the day: one must pay attention to laboratory details, bioinformatics is a bottleneck, and don't underestimate the impact of NGS systems on IT.

In this post, I'll discuss the laboratory details and visit the other themes in posts to come.

Laboratory Management

To better understand the impact of NGS on the lab, we can compare it to Sanger sequencing. In the table below, different categories ranging from the kinds of samples, to their preparation, to the data, are considered to show how NGS differs from Sanger sequencing. Sequencing samples, for example, are very different between Sanger and NGS. In Sanger sequencing, one typically works with clones or PCR amplicons. Each sample (clone or PCR product) produces a single sequence read. Overall, sequencing systems are robust, so the biggest challenge for labs has been tracking the samples as they move from tube to plate or between wells within plates.

In contrast, NGS experiments involve sequencing DNA libraries and each sample produces millions of reads. Presently, only a few samples are sequenced at a time so the sample tracking issues, when compared to Sanger, are greatly reduced. Indeed, one of the significant advantages and cost savings of NGS is to eliminate the need for cloning or PCR amplification in preparing templates to sequence.

Directly sequencing DNA libraries is a key ability and a major factor that makes NGS so powerful. It also directly contributes to the bioinformatics complexity (more on that in the next post). Each one of the millions of reads that are produced from the sample corresponds to an individual molecule, present in the DNA library. Thus, the overall quality of the data and the things you can learn are a direct function of the library.

Producing good libraries requires that you have a good handle on many factors. To begin, you will need to track RNA and DNA concentrations at different steps of the process. You also need to know the “quality” of the molecules in the sample. For example, RNA assays will give the best results when RNA is carefully prepared and free of RNases. In RNA-Seq, the best results are obtained when the RNA is fragmented prior to cDNA synthesis. To understand the quality of the starting RNA, fragmentation, and cDNA synthesis steps, tools like agarose gels or Bioanalyzer traces are used to evaluate fragment lengths and determine overall sample quality. Other assays and sequencing projects have similar processes. Throughout both conferences, it was stressed that regardless of whether you are sequencing genomes, sequencing small RNAs, performing RNA-Seq, or running other “tag and count” kinds of experiments, you need to pay attention to the details of the process. Tools like the NanoDrop or qPCR procedures need to be routinely used to measure RNA or DNA concentration. Tools like gels and the Bioanalyzer are used to measure sample quality. And, in many cases, both kinds of tools are used.

Through many conversations, it became clear that Bioanalyzer images, Nanodrop reports, and other lab data quickly accumulate during these kinds of experiments. While an NGS experiment is in progress, these data are pretty accessible and the links between data quality and the collected data are easy to see. It only takes a few weeks, however, for these lab data to disperse. They find their way into paper notebooks, or unorganized folders on multiple computers. When the results from one sample need to be compared to another, a new problem appears. It becomes harder and harder to find the lab data that correspond to each sample.

To summarize, NGS technology makes it possible to interrogate large ensembles of individual RNA or DNA molecules. Different questions can be asked by preparing the ensembles (libraries) in different ways involving complex procedures. To ensure that the resulting data are useful, the libraries need to be of high and known quality. Quality is measured with multiple tools at different points of the process to produce multiple forms of laboratory data. Traditional methods such as laboratory notebooks, files on computers, and post-it notes, however, make these data hard to find when the time comes to compare results between samples.

Fortunately, the GeneSifter Lab Edition solves these challenges. The Lab Edition of Geospiza’s software platform provides a comprehensive laboratory information management system (LIMS) for NGS and other kinds of genetic analysis assays, experiments, and projects. Using web-based interfaces, laboratories can define protocols (laboratory workflows) with any number of steps. Steps may be ordered and required to ensure that procedures are correctly followed. Within each step, the laboratory can define and collect different kinds of custom data (Nanodrop values, Bioanalyzer traces, gel images, ...). Laboratories using the GeneSifter Lab Edition can produce more reliable information because they can track the details of their library preparation and link key laboratory data to sequencing results.

Wednesday, January 28, 2009

The Next Generation Dilemma: Large Scale Data Analysis

Abstract

The volumes of data that can be obtained from Next Generation DNA sequencing instruments make several new kinds of experiments possible and new questions amenable to study. The scale of subsequent analyses, however, presents a new kind of challenge. How do we get from a collection of several million short sequences of bases to genome-scale results? This process involves three stages of analysis that can be described as primary, secondary, and tertiary data analyses. At the first stage, primary data analysis, image data are converted to sequence data. In the middle stage, secondary data analysis, sequences are aligned to reference data to create application-specific data sets for each sample. In the final stage, tertiary data analysis, the data sets are compared to create experiment-specific results. Currently, the software for the primary analyses is provided by the instrument manufacturers and handled within the instrument itself, and when it comes to the tertiary analyses, many good tools already exist. However, between the primary and tertiary analyses lies a gap.

In RNA-Seq, the process of determining relative gene expression means that sequence data from multiple samples must go through the entire process of primary, secondary, and tertiary analysis. To do this work, researchers must puzzle through a diverse collection of early version algorithms that are combined into complicated workflows with steps producing complicated file formats. Command line tools such as MAQ, SOAP, MapReads, and BWA, have specialized requirements for formatted input and output and leave researchers with large data files that still require additional processing and formatting for tertiary analyses. Moreover, once reads are aligned, datasets need to be visualized and further refined for additional comparative analysis. We present a solution to these challenges that closes the gaps between primary, secondary, and tertiary analysis by showing results from a complete workflow system that includes data collection, processing and analysis for RNA-seq.
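As a rough illustration of how the secondary and tertiary stages fit together in RNA-Seq, the following Python sketch counts reads per transcript and then compares two samples. It is a toy under loud assumptions: exact substring matching stands in for a real aligner such as those named above, the transcript and read sequences are invented, and reads-per-million normalization with a pseudocount is just one simple choice, not the method used in any particular product.

```python
import math
from collections import Counter


def count_reads(reads, transcripts):
    """Secondary analysis (toy): assign each read to the first transcript containing it."""
    counts = Counter()
    for read in reads:
        for gene, seq in transcripts.items():
            if read in seq:
                counts[gene] += 1
                break
    return counts


def reads_per_million(counts):
    """Normalize raw counts so samples of different depth can be compared."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {gene: n * 1_000_000 / total for gene, n in counts.items()}


def log2_ratios(sample_a, sample_b, pseudocount=1.0):
    """Tertiary analysis (toy): log2(sample_b / sample_a) for every gene seen."""
    genes = sorted(set(sample_a) | set(sample_b))
    return {
        g: math.log2((sample_b.get(g, 0.0) + pseudocount)
                     / (sample_a.get(g, 0.0) + pseudocount))
        for g in genes
    }


# Hypothetical reference transcripts and reads from two samples.
transcripts = {"geneA": "ACGTACGTGGCCTTAA", "geneB": "TTGACCATGCAATCGG"}
control = ["ACGTAC", "GGCCTT", "TTGACC"]
treated = ["ACGTAC", "TTGACC", "CATGCA", "AATCGG"]

control_counts = count_reads(control, transcripts)
treated_counts = count_reads(treated, transcripts)
ratios = log2_ratios(reads_per_million(control_counts),
                     reads_per_million(treated_counts))
print(ratios)
```

Even in this toy form, each stage hands the next a differently shaped dataset (reads, then per-sample counts, then per-gene comparisons), which is where the file format and workflow gaps described above tend to appear.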

And, if you cannot be in sunny Florida, join us in Memphis where we will help kick off the ABRF conference with a workshop on Next Generation DNA Sequencing. I'm kicking the workshop off with a talk entitled "From Reads to Data Sets, Why Next Gen is Not Like Sanger Sequencing."

Wednesday, July 30, 2008

BioHDF at BOSC

The scale of Next Gen sequencing is only going to increase, hence we need to fundamentally change the way we work with Next Gen data. New software systems with scalable data models, APIs, software tools, and viewers are needed to support the very large datasets used by the applications that analyze Next Gen DNA sequence data.

That was the theme of a talk I presented at the BOSC (Bioinformatics Open Source Conference) meeting that preceded ISMB (Intelligent Systems for Molecular Biology) in Toronto, Canada, July 19th. You can get the slides from the BOSC site. At the same time, we posted a blog on Genographia, a next-generation genomics community web site devoted to Next Gen sequencing discussions and idea sharing. The key points are summarized below.

Motivation

The BioHDF project is motivated by the fact that the next and future generations of data collection technologies, like DNA sequencing, are creating ever increasing amounts of data. Getting meaningful information from these data requires that multiple programs be used in complex processes. Current practices for working with these data create many kinds of challenges, ranging from managing large numbers of files and formats to having the computation power and bandwidth to make calculations and move data around. These practices have a high cost in terms of storage, CPU, and bandwidth efficiency. In addition, they require significant human effort in understanding idiosyncratic program behavior and output formats.

Is there a better way?

Many would agree that if we could reduce the number of file formats, avoid data duplication, and improve how we access and process data, we could develop better performing and more interoperable applications. Doing so requires improved ways of storing data and making it accessible to programs. For a number of years we have thought about how these goals might be accomplished and looked to other data-intensive fields to see how others have solved these problems. Our search ended when we found HDF (Hierarchical Data Format), a standard file format and library used in the physical and earth sciences.

BioHDF

HDF5 can be used in many kinds of bioinformatics applications. For specialized areas, like DNA sequencing, domain specific extensions will be needed. BioHDF is about developing those extensions, through community support, to create a file format and accompanying library of software functions that are needed to build the scalable software applications of the future. More will follow. If you are interested, contact me: todd at geospiza.com.

Sunday, March 2, 2008

Genotyping with HDF

Organizing Data - A resequencing project begins with a region of a genome, a gene or set of genes that will be studied. A researcher will have sets of samples from patient populations from which they will isolate DNA. PCR is used to amplify specific regions of DNA so that both chromosomal copies can be sequenced. The read data, obtained from chromatograms, are aligned to other reads and also to a reference sequence. Quality and trace information are used to predict whether the differences observed between reads and reference data are meaningful. The general data organization can be broken into a main part called the Gene Model and within it, two sub organizations: the reference and experimental data. The reference consists of the known state of information. Resequencing, by definition, focuses on comparing new data with a reference model. The reference model organizes all of the reference data including the reference sequence and annotations.

The sub organizations of data can be stored in HDF5 using its standard features. The two primary objects in HDF5 are "groups" and "datasets." The HDF5 group object is akin to a UNIX directory or Windows folder – it is a container for other objects. An HDF5 dataset is an array structure for storing data and has a rich set of types available for defining the elements in an HDF dataset array. They include simple scalars (e.g., integers and floats), fixed- and variable-length structures (e.g., strings), and "compound datatypes." A compound datatype is a record structure, whose fields can be any other datatype, including other compound types. Datasets and groups can contain attributes, which are simple name/value pairs for storing metadata. Finally, groups can be linked to any dataset or group in an HDF file, making it possible to show relationships among objects. These HDF objects allowed us to create an HDF file whose structure matched the structure and content of the Gene Model structure. Although the content of a Gene Model file is quite extensive and complex, the grouping and dataset structure of HDF makes it very easy to see the overall organization of the experiment. Since all the pieces are in one place, an application, or someone browsing the file, can easily find and access the particular data of interest. The figure to the left shows the HDF5 file structure we used. The ovals represent groups and the rectangles represent datasets. The grayed out groups (Genotyping, Expression, Proteomics) were not implemented.
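The group, dataset, attribute, and link ideas described above can be sketched with a toy in-memory model. This is not the HDF5 API and not the code used in the project; the class names, the `make_group` and `lookup` helpers, the paths, and all sample values are hypothetical and exist only to show how the pieces relate.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Union


@dataclass
class Dataset:
    """Array-like leaf object: a list of records plus name/value attributes."""
    records: List[Any]
    attrs: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Group:
    """Container object, akin to a directory; holds groups and datasets."""
    children: Dict[str, Union["Group", Dataset]] = field(default_factory=dict)
    attrs: Dict[str, Any] = field(default_factory=dict)

    def make_group(self, path: str) -> "Group":
        """Create (or fetch) nested groups along a slash-separated path."""
        node = self
        for name in path.strip("/").split("/"):
            node = node.children.setdefault(name, Group())
            if not isinstance(node, Group):
                raise TypeError(f"{name!r} is a dataset, not a group")
        return node

    def lookup(self, path: str) -> Union["Group", Dataset]:
        """Resolve a slash-separated path to a group or dataset."""
        node = self
        for name in path.strip("/").split("/"):
            node = node.children[name]
        return node


root = Group()

# Reference side of the (hypothetical) Gene Model layout.
reference = root.make_group("GeneModel/Reference")
reference.children["sequence"] = Dataset(
    records=list("ACGTTGCA"),  # made-up sequence
    attrs={"description": "hypothetical reference"},
)

# Experimental side; each record plays the role of a compound datatype.
experimental = root.make_group("GeneModel/Experimental")
experimental.children["basecalls"] = Dataset(
    records=[
        {"position": 1, "base": "A", "quality": 40},
        {"position": 2, "base": "C", "quality": 38},
    ],
    attrs={"read_name": "example_read"},
)

# A link: the same dataset object reachable under a second path.
experimental.children["reference_sequence"] = reference.children["sequence"]

basecalls = root.lookup("/GeneModel/Experimental/basecalls")
print(basecalls.records[1]["base"], basecalls.attrs["read_name"])
```

In a real HDF5 file the records would be typed array elements on disk rather than Python dictionaries, but the path-based navigation is the same idea.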

Accessing the Data - HDF5's feasibility, and several advantageous features, are demonstrated by a screen shot obtained using HDFView, a cross platform Java-based application that can be used to view data stored in HDF5. The image below highlights the ability of HDF5, and HDF5-supporting technologies, to meet the following requirements:

- Support combining a large number of files

- Provide simple navigation and access to data objects

- Support data analysis

- Integrate diverse data types

The left most panel of the screen (below) presents an "explorer" view of four HDF5 files (HapMap, LD, ADRB2, and FVIII), with their accompanying groups and datasets. Today, researchers store these data in separate files scattered throughout file systems. To share results with a colleague, they e-mail multiple spreadsheets or tab-delimited text files for each table of data. When all of the sequence data, basecall tables, assemblies, and genotype information are considered, the number of files becomes significant. For ADRB2 we combined the data from 309 individual files into a single HDF5 file. For FVIII, a genotyping study involving 39 amplicons and 137 patient samples, this number grows to more than 60,000 primary and versioned copied files.

With HDF5 these data are encapsulated in a single file, thus simplifying data management and increasing data portability.

Example screen from the prototype demo. HDFView, a Java viewer for HDF5 files, can display multiple HDF5 files and, for each file, the structure of the data in the file. Datasets can be shown as tables, line plots, histograms and images. This example shows a HapMap dataset, LD calculations for a region of chromosome 22 and the data from two resequencing projects. The HapMap dataset (upper left) is a 52,636-row table of alleles from chromosome 22. Below it is an LD plot from the same chromosome. The resequencing projects, adrb2 and factor 8, show reference data and sequencing data. The table (middle) is a subsection of the poly table obtained from Phred. Using the line plot feature in HDFView, subsections of the table were graphed. The upper right graph compares the called base peak areas (black line, top) to the uncalled peak areas (red, bottom) for the entire trace. The middle right graph highlights the region between 250 and 300 bases. The large peak at position 36 (position 286 in the center table, and top graph) represents a mixed base. The lower right graph is a "SNPshot" showing the trace data surrounding the variation.

In addition to reducing file management complexity, HDF5 and HDFView have a number of data analysis features that make it possible to deliver research-quality applications quickly. In the ADRB2 case, the middle table in the screen shot is a section of one of the basecall tables produced by Phred using its "-d" option. This table was opened by selecting the parent table and defining the columns and region to display. As this is done via HDF5's API, it is easy to envision a program "pulling" relevant slices of data from a data object, performing calculations from the data slices and storing the information back as a table that can be viewed from the interface. Also important, the data in this example are accessible and not "locked" away in a proprietary system. Not only is HDF an open format, HDFView allows one to export data as HDF subsets, images, and tab delimited tables. HDFView's copy functions allow one to easily copy data into other programs like Excel.

HDFView can produce basic line graphs that can be used immediately for data analysis, such as the two that are shown here for ADRB2. The two plots in the upper right corner of the screen show the areas of the peaks for called (black, upper line) and uncalled (red, lower line) bases. The polymorphic base can be seen in the top plot as a spike in the secondary peak area. The lower graph contains the same data, plotted from the region between base 250 and 300 of the read. This plot shows a high secondary peak with a concomitant reduction in the primary peak area. One of PolyPhred's criteria for identifying a heterozygous base, that primary and secondary peak areas are similar, is easily observed. This demonstration matters because HDF5 and HDFView can provide rapid access to different views of the data, which gives them considerable potential in algorithm development.
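The "similar primary and secondary peak areas" criterion can be sketched as a simple ratio test over the two columns of peak areas. This is only an illustration of the idea, not PolyPhred's algorithm; the function name, the `min_ratio` threshold of 0.5, and the sample areas are all assumptions made up for the example.

```python
from typing import List, Sequence


def candidate_mixed_bases(
    called_areas: Sequence[float],
    uncalled_areas: Sequence[float],
    min_ratio: float = 0.5,  # hypothetical threshold, not a PolyPhred value
) -> List[int]:
    """Return 0-based positions where the secondary (uncalled) peak area
    is at least `min_ratio` of the primary (called) peak area."""
    if len(called_areas) != len(uncalled_areas):
        raise ValueError("area lists must have the same length")
    hits = []
    for pos, (primary, secondary) in enumerate(zip(called_areas, uncalled_areas)):
        if primary > 0 and secondary / primary >= min_ratio:
            hits.append(pos)
    return hits


# Made-up areas: position 2 has a reduced primary peak and a high secondary peak.
called = [1000.0, 980.0, 520.0, 1010.0]
uncalled = [30.0, 25.0, 480.0, 40.0]
print(candidate_mixed_bases(called, uncalled))  # prints [2]
```

A program working against an HDF5 file would read just these two columns for the region of interest rather than loading the whole basecall table.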

More significantly, HDFView was used without any modifications, demonstrating the benefit of a standard implementation system like HDF5.

Combining Diverse Data - The screen shot also shows the ability of HDF5 to combine and present diverse data types. Data from a single file containing both SNP discovery projects are shown, in addition to HapMap data (chromosome 22) and an LD plot consisting of a 1000 x 1000 array of LD values from a region of chromosome 22.

As we worked on this project, we became more aware of the technology limitations that hinder work with the HapMap and very large LD datasets and concluded that the HapMap data would provide an excellent test case for evaluating the ability of HDF5 to handle extremely large datasets.

For this test, a chromosome 22 genotype dataset was obtained from HapMap.org. Uncompressed, this is a large file (~24MB), consisting of a row of header information followed by approximately 52,000 rows of genotyped data. As a text file, it is virtually indecipherable and needs to be parsed and converted to a tabular data structure before the data can be made useful. When one considers that, even for the smallest chromosome, the dataset is close to Microsoft Excel's (2003, 2004) row limit (65,536), the barrier to entry for the average biologist wishing to use these data is quite high.

To put the data into HDF5 we made an XML schema of the structure to understand the model, built a parser, and loaded the data. As can be seen in HDFView (Fig. 6), the data were successfully converted from a difficult-to-read text form to a well-structured table where all of the data can be displayed. At this point, HDFView can be used to extract and analyze subsets of information.
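The parse-then-subset step can be sketched as follows. This is a minimal illustration, not the parser built for the project: it assumes a whitespace-delimited layout with a single header row, and the column names, SNP identifiers, positions, and genotypes in the sample text are invented for the example.

```python
from typing import Dict, List, Sequence


def parse_genotype_table(text: str) -> List[Dict[str, str]]:
    """Turn header-plus-rows text into a list of records keyed by column name."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    rows = []
    for row_no, line in enumerate(lines[1:], start=1):
        fields = line.split()
        if len(fields) != len(header):
            raise ValueError(
                f"data row {row_no}: expected {len(header)} fields, got {len(fields)}"
            )
        rows.append(dict(zip(header, fields)))
    return rows


def select(rows: List[Dict[str, str]], columns: Sequence[str],
           start: int, stop: int) -> List[List[str]]:
    """Pull a rectangular slice: rows[start:stop] restricted to `columns`."""
    return [[row[c] for c in columns] for row in rows[start:stop]]


# Made-up sample in the assumed layout (not real HapMap records).
sample_text = """\
snp_id alleles chrom pos sample_1 sample_2
rs0001 A/G chr22 14431347 AG GG
rs0002 C/T chr22 14432618 CC CT
rs0003 A/C chr22 14433624 AA AC
"""

rows = parse_genotype_table(sample_text)
print(len(rows), "rows parsed")
print(select(rows, ["snp_id", "sample_2"], 1, 3))
```

Once the rows are in a table with named columns, pulling out a block of SNPs for a few samples is a matter of naming the columns and the row range, which is the kind of subsetting HDFView exposes interactively.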

The LD test demonstrates the power of HDF5's collection of sophisticated storage structures. We have seen that we can compress datasets inside an HDF5 file, but we also see that compressing an entire dataset creates access problems. HDF5 deals with this problem through a storage feature called "chunking." Whereas most file formats store an array as a contiguous stream of bytes, chunking in HDF5 involves dividing a dataset into equal-sized "chunks" that are stored and accessed separately.

LD plot for chromosome 22. A 1000 x 1000 point array of LD calculations is shown. The table in the upper right shows the LD data for a very small region of the plot, demonstrating HDF's ability to allow one to easily select "slices" of datasets.

Chunking has many important benefits, two of which apply particularly to large LD arrays. First, chunking makes it possible to achieve good performance when accessing subsets of the datasets, even when the chosen subset is orthogonal to the normal storage order of the dataset. If a very large LD array is stored contiguously, most sub-setting operations require a large number of accesses, because data elements with spatial locality can be stored a long way from one another on a disk, requiring many seeks and reads. With chunking, spatial locality is preserved in the storage structure, resulting in faster access to subsets. Second, when a data subset is accessed, only the chunks that contain specific portions of the data need to be uncompressed. The extra memory and time required to uncompress the entire LD array are both avoided.
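The access-count argument can be made concrete with a little arithmetic. The sketch below counts how many chunks a rectangular selection overlaps and compares that with the number of separate byte runs the same selection needs in a row-major contiguous layout. The 100 x 100 chunk shape and the function names are assumptions chosen for illustration; they are not taken from the LD test.

```python
from typing import Tuple

Range = Tuple[int, int]  # half-open [start, stop)


def chunks_touched(row_range: Range, col_range: Range,
                   chunk_shape: Tuple[int, int]) -> int:
    """Number of chunks that overlap the selected rows x columns."""
    (r0, r1), (c0, c1) = row_range, col_range
    chunk_rows, chunk_cols = chunk_shape
    n_row_chunks = (r1 - 1) // chunk_rows - r0 // chunk_rows + 1
    n_col_chunks = (c1 - 1) // chunk_cols - c0 // chunk_cols + 1
    return n_row_chunks * n_col_chunks


def contiguous_runs(row_range: Range, col_range: Range, total_cols: int) -> int:
    """Separate byte runs needed for the selection in a row-major layout."""
    (r0, r1), (c0, c1) = row_range, col_range
    if c0 == 0 and c1 == total_cols:
        return 1  # whole rows sit next to each other on disk
    return r1 - r0  # otherwise one run per selected row


# A 1000 x 1000 LD array with hypothetical 100 x 100 chunks.
column_block = ((0, 1000), (500, 510))   # 10 columns across every row
small_square = ((200, 250), (300, 350))  # a 50 x 50 region

for rows_sel, cols_sel in (column_block, small_square):
    print(
        chunks_touched(rows_sel, cols_sel, (100, 100)),
        "chunks vs",
        contiguous_runs(rows_sel, cols_sel, 1000),
        "contiguous runs",
    )
```

For the column block the chunked layout reads 10 chunks instead of 1000 separate row fragments, and only those 10 chunks would need to be uncompressed. The trade-off is that each chunk is read whole, so chunk shape has to be chosen with the expected access pattern in mind.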